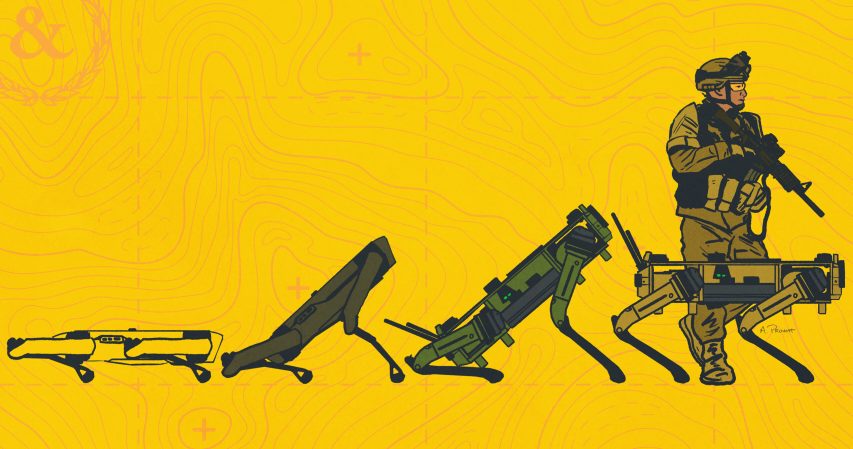

Militaries around the world are pursuing autonomous weapons. Fighting alongside humans, robotic swarms in the skies or on the ground could attack enemy positions from angles regular troops can’t. And now those weapons may be closer to reality than ever before.

That is according to a new report from the Associated Press on the Pentagon’s “Replicator” program, which is meant to accelerate the Department of Defense’s use of cheap, small, easy-to-field drones run by artificial intelligence. The goal? To have thousands of these weapons platforms by 2026. The report notes that officials and scientists agree the U.S. military will soon have fully autonomous weapons but want to keep a human overseeing their use. The question the military now faces is how to decide whether, or when, to allow AI to use lethal force.

So yes, the Pentagon is one step closer to letting AI weapons kill people. But this does not mean Skynet has gone active and Arnold Schwarzenegger-looking robots are out to wipe out humanity. At least not yet.


Instead, governments are looking at ways to limit or guide how artificial intelligence can be used in war. The New York Times reported on several of those efforts, including American and Chinese talks to limit how AI is used with regard to nuclear armaments (to prevent a Skynet-type scenario). However, these talks and proposals are heavily debated, with some parties saying no regulation is needed while others propose extremely narrow limits. So even as the world moves closer to these kinds of AI weapons, international legal guidance for their use in war remains unclear.

The U.S. military has already worked extensively with robotic, remote-controlled, or outright AI-run weapons systems. Soldiers are currently training to repel drone swarms, using both high-tech counter-drone weaponry and more conventional kinetic options. Meanwhile, the U.S. Navy has remote-controlled vessels, and the Air Force is pursuing remote-controlled aircraft to serve as wingmen. Earlier this year, the Air Force’s chief of AI Test and Operations said that an AI-controlled drone had attacked its human operator during a simulation, but the Air Force later walked that claim back.

The war in Ukraine has seen extensive deployment of all manner of uncrewed vehicles, from maritime vessels to UAVs, many of them hobbyist or commercial models, but those have been piloted by troops on the ground. Still, the potential for both offensive and reconnaissance use makes these technologies, and their further development, a priority for militaries around the world.
