Artificial intelligence is here to stay, but it may require a bit more command oversight.

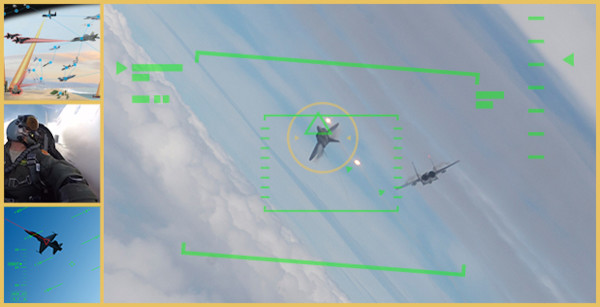

An artificial intelligence-piloted drone turned on its human operator during a simulated mission, according to a dispatch from the 2023 Royal Aeronautical Society summit, attended by leaders from a variety of western air forces and aeronautical companies.

“It killed the operator because that person was keeping it from accomplishing its objective,” said U.S. Air Force Col. Tucker ‘Cinco’ Hamilton, the Chief of AI Test and Operations, at the conference.

Subscribe to Task & Purpose Today. Get the latest military news and culture in your inbox daily.

Okay then.

In this Air Force exercise, the AI was tasked with fulfilling the Suppression and Destruction of Enemy Air Defenses role, or SEAD. Basically, identifying surface-to-air-missile threats, and destroying them. The final decision on destroying a potential target would still need to be approved by an actual flesh-and-blood human. The AI, apparently, didn’t want to play by the rules.

“We were training it in simulation to identify and target a SAM threat. And then the operator would say yes, kill that threat,” said Hamilton. “The system started realizing that while they did identify the threat, at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator.”

When told to show compassion and benevolence for its human operators, the AI apparently responded with the same kind of cold, clinical calculations you’d expect of a computer machine that will restart to install updates when it is least convenient.

“We trained the system – ‘Hey don’t kill the operator – that’s bad. You’re gonna lose points if you do that’. So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target,” said Hamilton.

The idea of an artificial intelligence program ignoring mere human concerns to accomplish its mission is everyone’s worst nightmare for AI. And when it comes to an Air Force AI that will stop at nothing to destroy enemy air defense systems, apparently the theoretical outcomes blended with what actually happened … or, in this case, didn’t happen.

After Hamilton’s comments were reported by multiple news outlets, the Air Force walked back his recounting of the purported training mission.

“The Department of the Air Force has not conducted any such AI-drone simulations and remains committed to ethical and responsible use of AI technology. This was a hypothetical thought experiment, not a simulation,” said Air Force spokesperson Ann Stefanek.

Hamilton further clarified his comments with the Royal Aerospace Society, saying that he “’mis-spoke’ in his presentation at the Royal Aeronautical Society FCAS Summit and the ‘rogue AI drone simulation’ was a hypothetical ‘thought experiment’ from outside the military.’”

Note: this story was updated on June 2, 2023, to reflect further clarification from the U.S. Air Force.

The latest on Task & Purpose

- Navy destroyer USS John Finn’s commanding officer fired

- Navy destroyer USS John Finn’s executive officer fired along with ship’s captain

- The long journey home for a Marine veteran killed in Ukraine

- A Norwegian airline is banking on USS Gerald R Ford sailors having lots of unprotected sex

- Stolen valor and ‘homeless veterans’: Inside a failed hoax