In the future, police officers will patrol neighborhoods alongside a robot partner outfitted with sensors and a powerful artificial intelligence brain. The bot will be able to do everything the human can do, but instead of relying on “a hunch,” the police bot will scan the crowd, search for criminals, and predict when something bad is about to go down.

If it sounds like science fiction, that’s because it still is — at least, for now. Yet you can catch a preview of this futuristic world in the pages of Burn In, a new novel written by P.W. Singer, a strategist at New America, and August Cole, a former Wall Street Journal reporter. It’s the second techno-thriller from the pair with a knack for showing where the technology of right now may ultimately go in the future.

In their book Ghost Fleet, Singer and Cole examined a hypothetical war between the United States and China that prominently featured sophisticated cyber attacks ahead of a major brawl between the two great powers. It was something of a wake-up call when it was published in 2015 and has been on military reading lists ever since.

In Burn In, released this week, Singer and Cole take readers to an unspecified date in the future, when an FBI agent searches for a high-tech terrorist in Washington, D.C. The story is set after what the authors call the "real robotic revolution," and Agent Lara Keegan is teamed up with a robot that is less Terminator and far more a useful, and highly intelligent, law enforcement tool. Perhaps the most interesting part: just about everything that happens in the story can be traced back to technologies that are being researched today.

Task & Purpose spoke with one of the authors, P.W. Singer, about the book, science fiction, the ethics of robotics, and whether we’ll ever see wars fought between military robots. The interview has been lightly edited and condensed for clarity.

Task & Purpose: I read the book and I really enjoyed it.

I loved Ghost Fleet. And I don’t know if I can even compare the two because Burn In is such a different book, but it really builds upon a lot of what I liked about Ghost Fleet, which was taking technology right now, and basically extending it out years ahead.

Do you have a sense of where that is? Can you look in your crystal ball and say ‘this is going to be 2050, here is what it’s going to be like?’ Do you have that in your mind?

Peter Singer: We don't set an exact date like that for a couple of reasons. One is that there are so many other variables that might pop in to either knock something off track or accelerate it. Those variables range from an economic recession to a war to a pandemic.

We're seeing some areas leap well past where people thought they would be years from now. Take the field of telemedicine. After a couple of months of dealing with coronavirus, that field is already where the industry thought it would be 10 years from now.


It jumped ahead that rapidly. And of course there are other areas that were predicted to come along on pace and instead slowed down. So that's the first reason we don't put exact dates on it.

The second is the Arthur C. Clarke 2001 problem. You put a date on it, and sometimes it doesn’t age well. So that’s the other reason that we don’t do that. But we give credit to Clarke in a different way through something he said.

Clarke was a great scientist as well as science fiction writer. He described how once you start to move more than a generation ahead, you start to move from the realm of science into the realm of magic. So that’s where we try and stay.

And you can see the subtle references to it, where the main character, Keegan, will mention some kind of device, be it an iPad or iPhone, for music from when she was young, right? Or her parents listening to Nine Inch Nails. That's a tell that we're not one year away, but we're also not multiple generations away.

For military readers on Task & Purpose, something that I find hilarious is in defense acquisition plans and sometimes war games. For example, there’s a certain airplane where the plan is that we’ll be buying the last of them somewhere around 2080. And that’s literally written into the plan.

One, I really pity that poor pilot who’s getting into a plane designed in the 1990s in 2080, but you know, two, who are we using it to fight, you know, the Mesoamerican Empire or the Alpha Centauri aliens? I mean, it’s just so far out in the future that it’s not useful.

And that goes back to the overall idea of the book. It is what we call ‘useful fiction,’ or what August coined as FICINT, which is short for fictional intelligence. You know, just like, SIGINT, or HUMINT, it’s a tool of analysis and explanation, but the key is that it’s not just sci-fi. It’s got to be pulled from the real world. And that means real world setting — not another planet. It means real world action. For example, every cyber attack that takes place in Burn In is something that already happened in the real world, or it has been shown off by a hacker at a DEFCON convention.


It means also no “vaporware” — real world tech. The tech can’t be dreamed up. Either someone’s already using it, or it’s a prototype already being planned for deployment. And so that rule of real I think, grounds it, but it also hopefully adds to the excitement of it, where [readers get] to that holy crap moment of ‘wow,’ and then can go check the endnotes and [understand] that it really can happen.

I think what a lot of people really like about Ghost Fleet and Burn In is that they’re based in reality. They’re talking about this future world but with what now is nascent technology and showing what it could evolve into. In Ghost Fleet that was the Navy’s littoral combat ship and the F-35 fighter, in Burn In we’ve got robots and artificial intelligence.

The main robot character is called TAMS. Can you talk about the capabilities of TAMS and what those are based on?

Ghost Fleet came out in 2015, and literally today, one of the systems we talked about back then that sounded like science fiction was tested on a U.S. Navy ship. It's a laser system to defend against drones and missiles. Back then it was a prototype, and now it's deployed.

That's much of what we're after with Burn In: the technology is either, as [William] Gibson would say, unevenly distributed, or you just need to move the timeline forward a little bit.

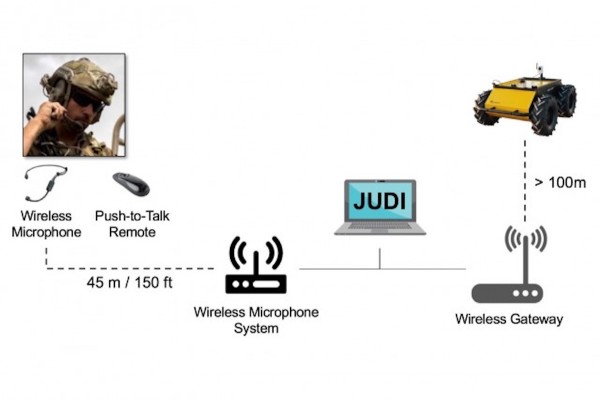

TAMS, short for tactical autonomous mobility system, is essentially Siri or Alexa on the software side, moved slightly forward. On the hardware side, it's the robotics people have seen deployed in the military right now, [along with] the Boston Dynamics robot doing parkour, moved forward.

That moves forward not just its capabilities, but also how we interact with it. And one of the fun things to play with is what that interaction means for the humans in the real world and the humans in the story.

TAMS is never said to be a character. It’s a tool. Just like a car is a tool, just like your toaster is a tool. But what is happening in the real world and that we also show in the story, is that we humans can’t help ourselves.

The main character, Keegan, is a former squad systems operator, which is a new role in the Marine Corps. You may get a kick out of this. Gen. [Robert] Neller is a big fan of Burn In. We sent him an early copy because he's been so kind about Ghost Fleet, and besides loving the story, he gave us a really good blurb for Burn In.

Asked for his thoughts on Burn In, Neller, the former Marine commandant, pointed to his blurb review and told Task & Purpose “it is a great read and Mr. Singer, as he always does, makes the reader consider a future that is likely to become a reality faster than we can imagine.” Now back to Singer…

He also was particularly psyched that one of the reforms that he had pushed through — adding that role of [squad systems operator] in the squad — was in the story.

The point is that she’s someone who is experienced with robotic and unmanned systems, and knows their positives and their negatives.

And she tells herself, don’t be like those people that would give their blown up packbots a funeral or that would run out under heavy machine gunfire to rescue a [utility task vehicle] stuck in the mud — which are real world examples from Afghanistan and Iraq. Don’t be like that.

And yet through the course of the story, Keegan finds herself trusting and interacting with the robot in a different way. Protesters on the street interact with it differently than they would with an iPad in your hand. You, the reader, also can't help yourself, and you'll find yourself treating TAMS as a character and, at certain points, liking it.

Yeah. One thing I was really struck by is how TAMS interacts with humans. You make the robot a hero. In so many sci-fi books and movies, the robots are the bad guys. There's the Terminator. There's 2001 and its murderous AI. But you take the opposite track. Are you more hopeful about AI and robotics? Do you think they're going to be a net positive for humanity?

From the very start, with the 1920s play R.U.R., the word "robot" itself was created to describe a mechanical servant that rises up. It's always been a robot revolt storyline, from the Terminator to The Matrix, you name it. That would be fine if it just stayed within fiction, but it actually has a real-world impact. We can see it in the debate over "killer robots," which has played out everywhere from Pentagon policy to the floor of the United Nations to the scientific community. You know, tycoons have invested over $5 billion [fighting] the existential threat of robots.

The reality is we may have to figure out whether to fight or salute our metal masters one day, but during my lifetime, it's not a revolt of the robots. It's a robotics revolution. It's an industrial revolution going on all around us.

And industrial revolutions shape everything from business and the economy to society and national security. You get new winners and losers on an individual level, on an organizational level, and on a national level. Think back to the last industrial revolution. We're living through the equivalent of that right now, but maybe it goes a little further. Because with AI and robotics, it's not just a story of a tool in someone's hands.


There's the farmer dropping the shovel to pick up the hammer and go work on an assembly line, and then later sitting behind a keyboard. But this is a tool that's increasingly intelligent, so it does more and more on its own. And that means it affects all sorts of different roles, [including in] the military: everything from the role of pilot, which we're already starting to see shaped by unmanned systems, to roles like staff officer, logistician, or lawyer.

Oxford University did a study of 702 different occupational specialties — almost all of which have their military parallel — and it found that 47% of jobs in the U.S. were at risk for replacement or displacement by these forces over the next 20 years.

So what I’m getting at is, going through one of these revolutions, it will have both good and bad effects. Whether it was the first stone that someone picked up or a drone, good guys and bad guys use them. That stone was used first to either grind something down to make it more edible or to bash someone else in the head. Drones are used by the military on the battlefield [and] are right now being used to deliver test kits to distant hospitals.

Part of the worry is that there's this massive disconnect between these important forces playing out all around us and how well people at the highest levels of power understand them. Right?

The importance of AI and robotics is woven into everything from the national defense strategy to pretty much every Fortune 500 company. And yet, you know, you’ve got Secretary of the Treasury Mnuchin saying it’s not “on his radar screen” because it’s not going to be an issue for “50 to 100 years from now.”

Come on.

One of the things you brought up was job displacement. In the book you're talking about this robot, TAMS, which looks to be revolutionary for law enforcement. I can picture this kind of robot going on patrol with me as a Marine in Afghanistan. With all its sensors, if I'm a grunt, it's gonna be amazing.

But there are people in this book that are out of work where you wouldn’t think they would lose work: The husband is a lawyer. No jobs for lawyers anymore. AI is doing that. There are songs on the radio, and questions are raised in the book about whether they were written by a human or a machine.

Where do these jobs go? In one sense you’re talking about this great robot revolution, but the flip side is that it’s really horrible for a lot of people. People are just knocked out of the economy.

For the sci-fi fan, it's not the world of Wall-E, but more like Gibson, so we're not sitting back eating bonbons because everything's great. Yes, you've got advanced technology, but you still have all of the issues of politics and economics and society, including, you know, inequality and all of that. For the military people, you have friction, things don't work out the way you planned, and the enemy gets a vote.

Bad guys have access to the technology, or they are trying to find its vulnerabilities. So the question of what role is left for the human is one of the questions of the book, but it connects to a larger issue, which is what human-machine teaming looks like. We're going to have this technology. That is inarguable. And I've got the endnotes to prove it.

The question is, what roles will the machine replace? And that's not just a question of whether it can, but whether it should. What roles will there be, and what does that team look like? And then, most importantly, how do you train the humans for that?

That's an issue for everything from doctrine to current and future U.S. military training to what our kids are learning.

So if you want to ensure that the dystopian side of Burn In comes true, then keep on having plans that don’t assume enemies are going to go after new cyber vulnerabilities that are introduced, or keep having plans that dump massive amounts of data on the individual soldier or Marine at the pointy end of the spear, so that you’re not aiding them but instead flooding them with information. Keep on training our kids for jobs that aren’t going to be there in the world tomorrow, setting them and our nation up for a fall.

And so that's the idea of it. For some people, [the book] is just going to be pure enjoyment. I hope they find it an amazing escape given everything going on right now, and I hope they fall in love with the characters. But I also hope that, on the nonfiction side, it can be not merely an act of prediction but also an act of prevention.

I hope by allowing people to visualize the world to come, they get a better understanding of key terms and key issues they’re gonna face. It will allow them to avoid some of those nightmare scenarios and take action so that some of the things in the book that maybe we don’t like, don’t come true. That’s, you know, that’s different than the normal goal of a work looking into the future.

There’s parts of the book that I think are cool and awesome. And I really look forward to that kind of tech.

And there are other parts that point to major problems we've got to deal with. The example we had in the background of Ghost Fleet was the U.S.-China rivalry. If you go back to when we were researching and writing [that book], the whole world of national security policy, and even the fiction world, was totally consumed by terrorism and insurgency studies.

And we were saying no, no, there's going to be a return to great power rivalry with the rise of China. But it was also about specific issues; supply chain security, for example, was something we helped raise awareness of. And what's so great about fiction is that you can raise an issue in a way that hits with greater power and is seen by more people, all the way up to the highest levels.

I've had some nonfiction books that have done well on the military reading list, but it was Ghost Fleet that got me invited to brief in the White House Situation Room and in The Tank, the conference room for the Joint Chiefs. It was Ghost Fleet that sparked investigations of these issues both within the Defense Department and by the GAO. And Ghost Fleet is the one that shares its name with a $3.6 billion Navy ship program.

Part of that is because no one ever said to someone else, man, this is such a good PowerPoint, you ought to read it on your next vacation. But fiction can do that. And what we’re trying to accomplish is to have the fiction drawn from real world issues and facts. So the person walks away from it both entertained, but also understanding a little bit more.

Let's talk civil liberties. In the book, Keegan walks around with "viz glasses" that scan everyone's face automatically, in some cases pointing out past arrest records. There are all kinds of other sensors, cameras, and technologies picking up and swallowing up all these different signals.

But where do law and ethics come in to push back on these things? It's not just the viz glasses. There are also drones overhead all the time in this book, flown by news outlets, criminals, and even random people who just want to see what's going on.

I found myself wondering, where are lawmakers on this? Will they ban drones or outlaw other items? Where do you see that debate going?

All those issues are already playing out in the real world right now. Take facial recognition AI that allows you to match a person not just to an identity but to their life history. You may have seen, you know, the discussions about a company called Clearview that gave its app, so to speak, to some of its funders and leaders, who were using it at parties and on planes to identify people. The point is that it both opens up incredible opportunities and can be weaponized against the individual or society writ large.

You can just look at someone and immediately pull up their entire life history. And with AI you can not only pull up history, you can start to predict actions or even influence them.

Technology is moving at an exponential pace, but law and policy are moving at a glacial pace. So the disconnect becomes wider and wider.

Every time you get a new technology, you get new questions of law and ethics around it. And it’s the same with robotics and AI. But because this technology is something different, it’s an intelligent one, it means we get three types of questions that we really never wrestled with before.

One is the question of machine permissibility. What is it allowed to do or not? Not what is the human using it allowed to do or not, but what is [the robot] on its own allowed to do or not? What information is it allowed to collect? Or where is it allowed to go? Even who is it allowed to kill?


The second issue is machine accountability. Who owns it? That means who owns [its collected data], but also who owns it if something goes wrong, from Tesla cars on the street that have gotten into wrecks and killed people to military deployment questions. There's one advisory system in the tactical operations center that recommends routes for people to take, based not on time savings but on expected casualties. I'm the son of a JAG officer; that thing is a court-martial waiting to happen.

Then the third question is machine rights.

What are people allowed to do, not just with the machine, but to the machine? This is a strange way that the world of things like sex bots connects to military robots.

The U.S. military has already concluded that unmanned systems have the same right of self-defense as manned platforms do. If someone shoots at them, they have the right to shoot back, even though there's not a human inside. In fact, the adversary doesn't even have to shoot: the U.S. Air Force has said that even if a drone gets lit up by a targeting radar, it has the right to fire a missile back.

That's a debate straight out of sci-fi. And yet it's a real issue right now. So these are important issues that we have to start wrestling with.

But we can't say, "No, that's not going to be an issue for 50 to 100 years." We're already seeing it now. And we have to wrestle with these issues in a way that's thoughtful and informed.

So the goal of the book is that people enjoy the ride, but also walk away from it thinking: what ought we to do about that?

One last question: You have this law enforcement example of TAMS in the book, detaining people, saving people just like a human police officer. Do we ever get to the point of deploying U.S. military robots to kill people or robots of another military?

Without a doubt. Look at all the technology being developed right now. Look at all the counter-drone technology being developed right now. And it's not just the U.S. military: adversaries ranging from Russia and China to ISIS have already deployed robotic systems. So yes, but I don't see robots-only fighting [happening anytime soon].

Because again, there’s certain things that machines are good at. And there are certain things humans are good at. And the question is really about the teaming.