According to a recent study by Matthew F. Cancian and Michael W. Klein of Tufts’ Fletcher School of Law and Diplomacy and the Brookings Institution, Marine officers have steadily grown less intelligent over the last 35 years, as shown by a downward trend in scores on the General Classification Test. On the basis of incomplete statistical analysis, the authors claim that a growing population of college graduates has diluted the intelligence, quality, and effectiveness of Marine Corps leaders.

In the concluding paragraph, the authors ask and answer:

> What has been the impact of this drop in quality on the effectiveness of the military? Answering this question is beyond the scope of this paper. Given the myriad studies associating performance with intellect, however, it is hard to imagine anything other than a seriously deleterious impact on the quality of officers and, by extension, on the quality and efficacy of the military.

Given this provocative language and the evocative power of a downward-sloping graph, it is perhaps not surprising that the report received coverage from outlets like Bloomberg, Fortune, and The Hill. It is unfortunate that such a hastily crafted study was summarized for a wide audience without attention to several factors that could confound its results.

As two former Marine Corps officers, we can cite personal experience with both brilliant and unintelligent Marine leaders, and we find it plausible that Marine officer intelligence has declined over the last 35 years. However, our anecdotes do little to illuminate this generational trend, and contrary to their lofty claims, Cancian and Klein’s analysis provides few reliable new insights.

The General Classification Test was developed in the 1940s by a team of psychologists commissioned by the adjutant general of the War Department to match the right man with the right job during World War II conscription. Among those jobs was serving as an officer, and the test provided a low-cost way to identify those who would succeed in initial officer training.

The pencil-and-paper, multiple-choice test focuses on spatial and quantitative reasoning, and in 1947 it aimed to separate slow learners from rapid learners. As Walter V. Bingham, head of the project for the War Department, described in the journal Science in 1947, slow learners were those close to illiteracy who might have trouble reading and learning from Army manuals. Rapid learners included scientists, engineers, editors, managers, and those who could serve as military leaders.

A minimum score of 110 was required to be classified as a rapid learner, and during World War II that score was also required to attend initial officer training unless a candidate had a college degree. However, as Bingham wrote, “beyond a certain indispensable minimum knowledge and intellectual acumen, the factors which tend to differentiate the best officers from the very good are not measured with precision by means of tests of educational achievement or scholastic aptitude.”

Today, the test is no longer used to distinguish between rapid and slow learners or to qualify candidates for Officer Candidate School. It is administered only to Marine officers who have already graduated and earned their commissions. The Armed Services Vocational Aptitude Battery replaced it for all enlisted personnel in the 1970s, and every service except the Marine Corps has stopped using it for officers. The score has no impact on a Marine officer’s career, is not part of an officer’s final grade at The Basic School, and has decreased in importance in deciding which military occupational specialty an officer will receive.

Despite the test’s declining relevance, Cancian and Klein attempt to use General Classification Test scores to compare officer competence, and at first glance their data seem compelling. They show a distinct downward trend in the average and maximum scores achieved by Marine officers from 1980 to 2014. The authors argue this trend is especially compelling because the test, unlike other standardized tests such as the SAT and the Armed Services Vocational Aptitude Battery, is not renormalized.

This is the first of Cancian and Klein’s faulty assumptions. It is not reasonable, let alone obvious, to assume that because the test has not changed with the times, it is a reliable basis for comparing intelligence across generations.

Any number of factors could explain the downward trend: Marine officers becoming less able to relate to the examples or language in 70-year-old test questions; the American education system shifting away from the skills the test measures; officers disregarding the test because it has no bearing on their careers; administrators disregarding it because they also know it is irrelevant to the rest of the curriculum; or Marine officers actually becoming less intelligent. However, the authors do not address the competing explanations in their analysis.

Of course, we cannot run an experiment to compare these hypotheses, because no one other than Marine officers has taken the test. If the rest of the American population had taken the test over the same 35-year span, we could determine whether the declining Marine officer scores were due to generational changes or to something else. As it stands, we cannot say that the declining test scores have anything to do with the Marines specifically. They just happen to be the ones taking the test.

Fortunately, there is a standardized test that a majority of both Marine officers and college-bound Americans writ large took during the 35-year period in question. SAT scores would have served as a compelling control for comparing one generation of Marine officers to another, since generational changes would be better understood by considering how Marine officers compare with other SAT takers. If Marine officers are becoming less intelligent in the dramatic way the authors suggest, we should see that trend reflected in SAT scores as well. Additionally, if the General Classification Test is a reliable measure of intelligence, scores on the two exams should be closely related across the sample.

To their credit, the authors do demonstrate that the declining scores were not correlated with an increase in diversity of the officer corps. This is an important point, and their evidence sufficiently rules out one possible explanation for the lower scores. However, this does nothing to rule out the very strong possibility that the declining scores over time are a product of the test itself, not the subset of people who take it. Without another group of test takers to compare to, the authors simply cannot control for this possibility.

Comparing intelligence across generations is difficult because society changes, and any study that asserts it can measure across generations with just one test should be read very skeptically.

Next, Cancian and Klein assert that a growing pool of college graduates decreases the average intelligence of that pool because comparatively more unintelligent people have the opportunity to attend college. As the authors explain,

> The key point is that the pool of those attending and completing college has increased dramatically over time, increasing the pool of potential officer candidates. If the expansion of this pool over time is biased towards increasing those who were less well-suited for higher education, then the average intellectual ability of college graduates will decrease over time.

Visualize a rubber band dangling vertically. The top of the rubber band is the smartest person who graduated college and the bottom is the least intelligent. Over time, the authors assert, as more unintelligent people go to college, the rubber band stretches down, and the average college graduate is now a little less intelligent in an absolute sense.

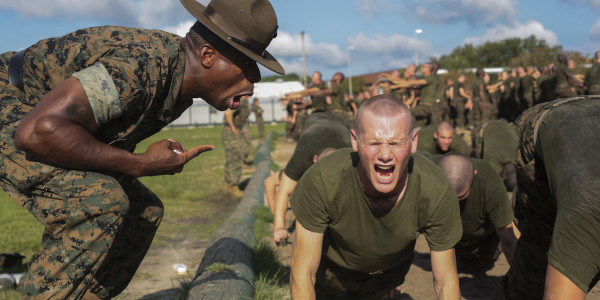

The authors’ mistake here is in assuming that a more diluted pool of college graduates will result in a more diluted sample of Marine officers. The only way Marine-officer intelligence could be impacted by this dilution is if the Marines pick candidates randomly from the pool with no consideration of their intellectual ability above the minimum requirement. The authors support this random selection theory by asserting that the Marine Corps is more concerned initially with physical fitness than intellect.

Again, perhaps this is true, but the report does not test this hypothesis. Every officer candidate must submit an application to a selection board, and that application includes college transcripts, letters of recommendation, an essay, fitness scores, and standardized test scores. For a diluted college pool to produce a diluted officer corps, the authors would need to show that the board does not select officers based on the indicators of intelligence available to it. Since no applicant has taken the General Classification Test at the time of selection, its scores are a puzzling and poor indicator of selection priorities.
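The difference between random and selective commissioning can be shown with a toy calculation. This Python sketch assumes a hypothetical 1980 pool of 50 graduates with ability scores from 100 to 149. The expansion then adds 20 graduates scoring 80 to 99, which stretches the rubber band downward. The sketch contrasts two idealized boards. The first draws officers at random, so the expected officer average equals the pool average. The second commissions the ten highest scorers. The scores, pool sizes, and `OFFICERS_PER_YEAR` are illustrative assumptions, not figures from the report.

```python
from statistics import mean

# Hypothetical ability scores for college graduates (illustrative only).
pool_1980 = list(range(100, 150))             # 50 graduates scoring 100-149
pool_2014 = pool_1980 + list(range(80, 100))  # expansion adds 20 lower scorers

OFFICERS_PER_YEAR = 10  # hypothetical number commissioned from each pool

def random_selection_mean(pool):
    """Expected mean ability if officers are drawn at random from the pool."""
    return mean(pool)

def merit_selection_mean(pool, k=OFFICERS_PER_YEAR):
    """Mean ability if a board commissions the k highest-scoring applicants."""
    return mean(sorted(pool, reverse=True)[:k])

for year, pool in [(1980, pool_1980), (2014, pool_2014)]:
    print(year, random_selection_mean(pool), merit_selection_mean(pool))
```

Under random selection, the expected officer average falls from 124.5 to 114.5 along with the pool. Under top-ten selection, it stays at 144.5 because every new graduate scores below the existing top of the pool. A real board falls somewhere between these extremes, and locating it there is the measurement the report would need to make.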

Beyond this causation problem, the theory does not fit the data. According to Table 1 in the report, the decline in average scores coincides with a large, nine-point drop in the maximum score and a smaller, four-point drop in the minimum score. Instead of the rubber band simply stretching down, the data suggest it is also being lowered from the top. Yet the authors’ model assumes the top of the rubber band remains constant and offers no explanation for why the maximum score would change. The data indicate that the smartest Marine officers have become worse at taking the test since 1980, but the paper ignores this fact.

This could indicate that the test is increasingly unreliable, or it could be the result of any number of other effects. Either way, it is not explained by a growing college population.

The intellectual skills that the General Classification Test identified as predictive of a strong officer during World War II may well not be the optimal skills for today. Trends in asymmetric warfare, insurgency, technology, and interaction with civilians have likely changed what makes an effective officer, and the Marine Corps constantly revises its training to keep pace with these priorities.

If the authors want to rigorously analyze the long-term trend in officer quality, they should consider many more variables. We’ve mentioned changes in SAT scores, but one should also consider changes in where officers attended college and in their grade-point averages, changes in The Basic School curriculum and academic exam grades, and changes in performance at downstream officer schools like Expeditionary Warfare School, Command and Staff College, or the war colleges.

As mentioned above, comparing intelligence between generations is very difficult. Assuming further that a single measure of intelligence captures what matters for today’s warfare and military leaders is even more problematic.

Cancian and Klein have tackled an important and difficult issue, and we welcome more rigorous discussion. As the Marines consider important issues around the future of the force, more careful and complete analysis is paramount to maintaining a smart Corps.