Fake videos have become such a potentially disruptive threat that the high-tech research arm of the Pentagon is launching a contest in early July aimed at detecting “deepfakes,” hoax videos so realistic that they could trigger political scandal or even spark violent conflict.

Some 10 teams from major universities across the United States and Europe will compete in a two-week contest to devise techniques to distinguish between fake and real videos.

“The goal is to provide the general public [with] a set of tools that we can use to verify images, video and audio,” said Siwei Lyu, a computer scientist at the University at Albany who leads one of the research teams taking part in the contest, sponsored by the U.S. Defense Advanced Research Projects Agency.

Fake videos, sometimes known as deepfakes, harness artificial intelligence and can be used to show people in places they never went, saying things they never said. As fake videos improve, they could rock both people and nations, even inflame religious tensions, experts said.

“The rate of progress has been astounding,” said Jeff Clune, an expert on synthetic videos. “Currently, they are decent, and soon they will be amazing.”

Fake videos already circulate. Last month, the Flemish Socialist Party Sp.a released a fake video of President Donald Trump addressing the citizenry of Belgium.

“Belgium, don’t be a hypocrite. Withdraw from the climate agreement. We all know that climate change is fake, just like this video,” the altered image of Trump says.

While that spoof video is crudely done, with Trump’s lips not exactly matching the audio, researchers say more professionally done fake videos are around the corner. An informal wager has broken out among a handful of them over whether a fake video will draw more than 2 million views by the end of 2018 before it is shown to be a hoax, according to IEEE Spectrum, a professional journal for electrical engineers.

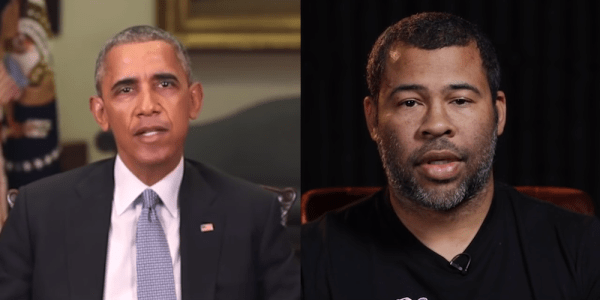

In April, the comedian and director Jordan Peele made a fake video of former President Barack Obama seemingly warning people about fake news.

IEEE Spectrum noted that a variety of fake videos came to the fore last December when a person going by the pseudonym Deepfakes showed how one could use AI-powered software to place the faces of celebrities on the bodies of porn stars. An explosion of amateur deepfake porn videos began to appear.

The advent of open-source artificial intelligence tools, such as Google’s TensorFlow, has supercharged experimentation. While porn took the lead in fake videos, experts said the technology will have a range of both positive and negative uses.

“The most interesting progress that we are seeing right now is around the forgery of faces … and the forgery of moving your lips to speak. These are actually two very powerful capabilities,” Gregory C. Allen, an AI and national security expert at the Center for a New American Security, a Washington think tank, told McClatchy.

Fake audio recordings, made by collecting hours of someone actually speaking, then remixing the phonemes, or distinct units of sound, are improving but still largely detectable as fake. One popular site is Lyrebird, which can mimic almost any voice.

“Right now, they sound tinny and robotic,” Allen said. But within five years, he said, audio recordings will “start to fool forensic analysis,” and fake video is on a similar track.

Some experts say the timeline may be shorter, and that video manipulators have the upper hand over forensic experts seeking to debunk fake videos.

The DARPA contest is part of a four-year Media Forensics program that brings together researchers to design tools to detect video manipulations and provide details about how the fakery was done. Teams scheduled to take part in the contest include ones from Dartmouth, Notre Dame and SRI International, once known as the Stanford Research Institute. The deadline for fake videos to be delivered to them for their analysis was Friday.

They will “have about two to three weeks to process the data and return their system output” to the National Institute of Standards and Technology, a federal agency in Gaithersburg, Md., that is working with DARPA, computer scientists Haiying Guan and Yooyoung Lee said in a statement to McClatchy.

The goal of the teams, they said, is “to develop and test algorithms that can be used in media forensics so that fakes and real videos can be properly recognized.”

Scientists worry about the mayhem fake videos could cause. Clune said he could foresee a stock market manipulator creating a fake video of an airplane crash in order to hurt the stock of an airplane manufacturer.

“You could create a wave of media. You could create that video and you could create a video of newscasters talking about it that looks like CNN or Fox,” he said, followed by faked news stories. Even if the cascade of forgeries fooled people only briefly, it would be “long enough for you to make a profit.”

Forged videos are certain to find their way into politics.

“People already are kind of predisposed to believe damaging material about somebody that’s of the opposite political party,” said Scott Stewart, vice president of tactical intelligence at Stratfor, a geopolitical forecasting firm in Austin, Texas. “Imagine those sorts of things amped up on steroids due to this new technology.

“You can say it’s fake but people will say, ‘I saw it with my own eyes.’”

Trump has underscored the power of social media to draw attention to controversial videos. Last November, he retweeted three videos rife with anti-Muslim sentiment from a British far-right group. While the videos were not fakes, Trump’s retweeting of them drew expressions of outrage from Prime Minister Theresa May and others.

Clune, the AI video expert, said the technology could be used in fields like education and entertainment. But the potential downsides are formidable.

“The negatives that worry me the most are that probably we will have a complete and total lack of trust in images and video going forward, which means that there will be no smoking gun video that, say, sets off a wave of interest and opposition to police brutality or government crackdowns or a chemical weapons attack,” Clune said. “In the past, these sorts of images and video catalyzed change in society.”

———

©2018 McClatchy Washington Bureau. Distributed by Tribune Content Agency, LLC.